As AI continues to develop impressive capabilities and integrate into every aspect of our lives, it is crucial to establish its boundaries. In Europe, this responsibility falls to the AI Act, which aims to legally regulate AI within European territory. It is also essential to implement a system of compliance evaluation and standards, essentially a metrology like that applied to any other product. To that end, the French Laboratory for Metrology and Testing (LNE) launched LEIA (the Artificial Intelligence Evaluation Laboratory) to evaluate AI software solutions and physical devices.

AI is a revolutionary technology that has already permeated all aspects of our lives. Today, AI is ubiquitous and used by everyone. With the advent of generative AI, its capabilities are rapidly advancing. Every day seems to bring an update to ChatGPT, the generative AI most widely used by the public, or the emergence of a new AI startup, such as French startup H, launched this week.

Metrology: The Science of Measurement for AI

However, AI also brings concerns. Europe has equipped itself with a regulatory framework, the AI Act, to control AI usage within its borders. But AI must also be evaluated like any other product on the market. This is where metrology, the science of measurement, comes in. Metrology is expected to play a crucial role in creating trustworthy AI, explains Thomas Grenon, General Director of the LNE:

“Testing the efficiency, robustness, safety, and ethics of AI requires metrology, a particularly complex science of measurement. This opens a new branch of measurement sciences.”

While AI holds promise and hope, it also raises concerns. This is where LEIA (the Artificial Intelligence Evaluation Laboratory) comes into play. Named after the princess from the Star Wars saga, it aims to address the concerns surrounding this technology by creating a framework of trust through metrology.

“To address the essential challenges of sovereignty, innovation, and competitiveness, LEIA was envisioned in 2018. It is a crucial tool for supporting the growth of intelligent technologies, not only to measure, compare, and improve their efficiency but also to ensure compliance with our values—our ethical values, our French values, and our European values.”


LEIA

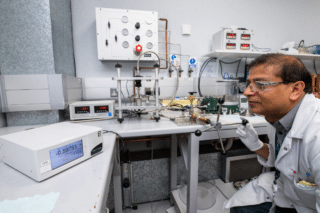

The LNE is currently deploying a unique infrastructure in Europe. LEIA is a multi-approach laboratory for the metrological characterization of intelligent systems.

LEIA enables the evaluation of AI-based software solutions and physical devices incorporating AI, to characterize their reliability and secure their use. The lab will also assess their ethical nature. For example, it will evaluate whether all data is treated fairly and whether information is disclosed to the user accurately. With LEIA, the LNE wants to position itself as a trusted third party. The goal is to guarantee trustworthy AI in French and European territories, protect consumers, and preserve industry competitiveness.

LEIA consists of three platforms offering different test environments that subject AI to a wide range of exhaustive and realistic scenarios.

- LEIA Simulation, deployed in 2023, allows the testing of an intelligent system on all possible criteria through digital simulation.

- LEIA Immersion, inaugurated last week, immerses the AI system in a simulated reality through video projection.

- LEIA Action, the third platform, is currently under development. It will immerse the system in real physical situations.


Evaluating AI Systems Embedded in Robots

What exactly is being tested, and how? AI technologies rely on deep learning and statistical learning, with models that can contain billions of parameters and are often referred to as black boxes. We can see the inputs and outputs, but the internal workings are a mystery. This makes testing essential to understand how they behave.

However, this task is incredibly complex. AI systems exhibit nonlinear behavior, operate in undefined domains, and adapt and learn continuously throughout their lifecycle.

This is where the LNE steps in, developing evaluation tools for both current and future AI systems.

Stéphane Jourdain, Head of the Medical Environment Testing Division at LNE, leads a team of engineers and PhDs responsible for conducting these tests.

“The first question we ask is what task the AI needs to perform, and then we test how well it accomplishes this task. The LEIA Immersion platform is dedicated to AI embedded in autonomous robots that move and make decisions in varied environments we can provide to our clients, such as hospitals, retirement homes, vineyards, factories… We use a motion tracking system to verify how the robot moves in space, following its trajectory. If the robot is equipped with an arm and needs to grab an object in the projected environment, we can track it, ensuring it moves in the right direction, at the right speed, and adheres to safety distances.”
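To make the idea concrete, here is a minimal sketch of the kind of check a motion tracking log could feed: given timestamped positions, compute the robot's peak speed and its closest approach to known obstacles, then compare both against limits. This is an illustration only, not LNE's method. The function name `check_trajectory`, the 2D coordinates, the sample data, and the thresholds are all assumptions made for the example.

```python
import math

def check_trajectory(samples, obstacles, max_speed, min_clearance):
    """Hypothetical check on a tracked trajectory.

    samples:   list of (t, x, y) tuples, seconds and meters (assumed format)
    obstacles: list of (x, y) obstacle positions in meters (assumed format)
    """
    # Peak speed between consecutive tracked samples.
    peak_speed = 0.0
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        peak_speed = max(peak_speed, math.hypot(x1 - x0, y1 - y0) / dt)

    # Smallest distance between any tracked position and any obstacle.
    closest = min(
        (math.hypot(x - ox, y - oy) for _, x, y in samples for ox, oy in obstacles),
        default=math.inf,
    )

    return {
        "peak_speed": peak_speed,
        "closest_approach": closest,
        "speed_ok": peak_speed <= max_speed,
        "clearance_ok": closest >= min_clearance,
    }

# Illustrative data: a robot moving along the x axis past one obstacle.
samples = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 2.0, 0.0)]
obstacles = [(2.0, 1.5)]
report = check_trajectory(samples, obstacles, max_speed=1.2, min_clearance=1.0)
print(report)
```

A real evaluation would work in three dimensions, account for the robot's footprint and measurement uncertainty, and take its limits from the applicable safety requirements rather than from hard-coded values.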

The lab can evaluate all types of robots, including assistive robots and AI in medical devices, as well as logistics robots, drones, and autonomous vehicles.

LNE has also evaluated weeding robots for the agricultural sector. The goal was to define benchmarking procedures to estimate the performance and impact of autonomous solutions for agriculture. This involves systematic testing principles that LNE has mastered for a long time.

“Previously, with the LEIA Simulation platform, we could create virtual models of AI systems, which only existed as software programs. We would input data into these models and analyze the results. This approach, known as data-based or simulation-based evaluation, has been our practice for a long time, relying on extensive server capabilities and data storage. With LEIA Immersion, we have taken a significant step forward. This new platform allows us to test AI systems more tangibly and realistically. Instead of just analyzing software, we now evaluate AI embedded in autonomous mobile systems by placing them in highly detailed, simulated environments. This immersive testing helps us better understand how these AI systems perform in real-world scenarios.”

In the future, new modules and simulation models will be gradually added, particularly for evaluating generative AI.

Over 1,000 AI Systems Already Evaluated

The LNE is no stranger to AI evaluation. The lab has assessed over 1,000 AI systems for industries and public authorities across sensitive fields such as medicine, defense, and autonomous vehicles, as well as in the agri-food sector and Industry 4.0.

In 2021, to prepare for the implementation of the AI Act, the LNE developed the first framework for the voluntary certification of AI processes. This framework is designed to ensure that AI solutions entering the market adhere to a set of best practices, covering design processes, algorithm development, data science, and considerations of both business context and regulatory environment.

For now, however, the laboratory is not tasked with AI certification, as Stéphane Jourdain emphasizes:

“Today, there is no existing regulatory framework for AI certification. The AI Act is a regulation, and like any regulation, it outlines expectations but does not provide specific methods. For example, a regulation might state that a machine should not be dangerous, but it doesn’t specify how to verify this. When you buy any household appliance, it must meet certain regulatory standards, which are verified through testing norms that specify the criteria. For AI, no such norms exist yet.”

LEIA is poised to play a crucial role in the development of Testing and Experimentation Facilities (TEFs), the reference sites established by the European Commission. These facilities will assist industries in testing their technologies and support the deployment of sovereign and ethical AI in Europe.

LNE’s Clients

Currently, LEIA primarily serves SMEs and startups that lack the internal resources to evaluate their AI, explains Thomas Grenon:

“Today, few companies, especially startups, have the infrastructure to validate their intelligent systems and improve their performance. The LEIA fills this gap and complements the LNE’s offerings in this field.”

To support these smaller businesses, LEIA offers a preferential rate for validating their AI, with discounts ranging from 20% to 40%. However, Stéphane Jourdain notes that other companies might also be interested in these tests before regulations eventually require them to comply.

“We have companies that seek our evaluation because it offers a competitive advantage to say they’ve been assessed by an independent and trusted third party. Then some want to buy AI solutions but don’t know which ones to choose. So they fund several evaluations for one to three years, and in the end, they benefit from the results to choose the best solution.”

Public Investment

AI has the potential to transform our economy, daily life, health, transportation, and communications. But according to Georges-Etienne Faure from the government’s General Secretariat for Investment (SGPI):

“Technology is neither good nor bad. It is powerful when it performs well and therefore needs to be controlled. To control it, it must be evaluated and with trust. Trustworthy AI must also be sustainable from a societal and environmental perspective, and we must solve this equation together.”

French President Emmanuel Macron invited AI experts to the Elysée Palace on Tuesday, May 21. He reaffirmed that artificial intelligence is a strategic priority for France, allocating nearly €2.5 billion from the France 2030 plan. He announced an additional €400 million in public investment for AI research and training.

Notably, he tasked the LNE and the National Institute for Research in Digital Science and Technology (INRIA), another organization we had the opportunity to interview, with the mission of jointly building a national AI model evaluation center. Macron said:

“This new AI evaluation center aims to become one of the world’s largest centers for AI model evaluation.”

In February 2025, France will host the AI Action Summit, bringing together the major AI players from around the world.