In healthcare, artificial intelligence promises remarkable technological advances, particularly in detection. In Europe, however, the progress of AI models runs into a significant hurdle: limited access to data. How can we navigate the complexities of health data governance when developing AI? Is it possible to build AI systems that are not only high-performing but also ethical, respecting patient rights and confidentiality? In response to these challenges, a growing number of researchers and companies are turning to federated AI models.

Would training AI without sharing data be the solution to the challenge of maintaining data confidentiality in healthcare? The rising star in this regard is Federated Learning. This technology allows the training of high-quality models using data distributed across independent centers. Instead of consolidating data on a single central server, each center keeps its data secure, while the algorithms and predictive models move between them.

A Growing Interest in Federated Learning

CEA-List, the research institute affiliated with CEA, is betting on this approach with a project that combines federated learning and blockchain. Its goal is to create a platform where businesses and developers can collaborate to train AI models without sharing their sensitive information.

The French biotechnology company Owkin is also counting on federated data access. It has developed AI models that accurately predict the response of mesothelioma patients (an aggressive cancer primarily affecting the lungs) to neoadjuvant chemotherapy. This achievement is based on interpretable AI trained on data stored in four French hospitals.

The French National Institute for Research in Digital Science and Technology (INRIA) is also working on federated AI models to address the issue of data governance and to escape the biases of single-center studies.

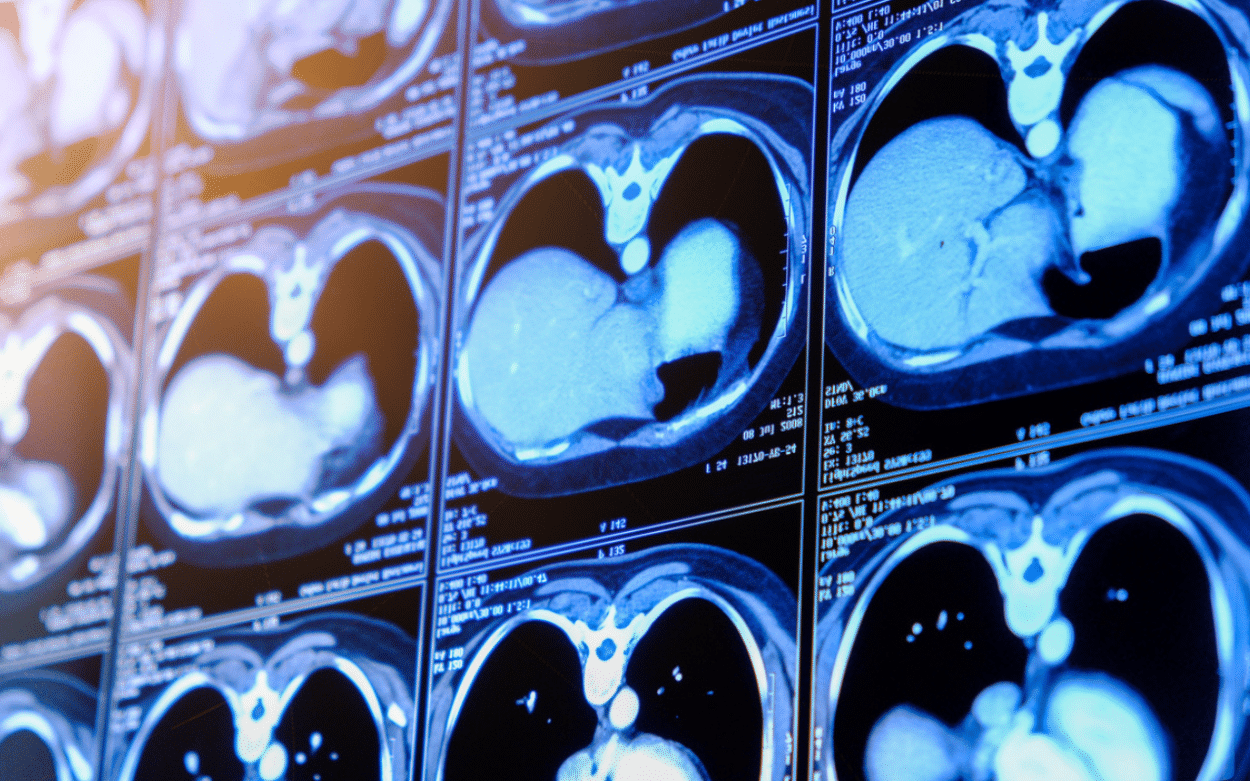

All these initiatives are paving the way for a more robust and ethically aligned AI landscape, because trust is essential, especially in the healthcare sector. The benefits of AI in detection no longer need to be demonstrated. Instead of relying on a radiologist to visually identify elements in an image, we can now employ automatic AI approaches that recognize pathological signs and sometimes even outperform radiologists at detecting them. But the key is to do this while respecting patient data.

FEDERATED-PET: A More Robust and Ethical AI Landscape

We met Marco Lorenzi of INRIA at the Sophia Summit a couple of weeks ago in the South of France. In his lab, he and his team are building a global AI model from AI models developed within different hospitals. Their one-million-euro project, FEDERATED-PET, started one year ago and will run for four years in total. It focuses on developing an AI capable of analyzing whole-body PET images to characterize metastatic lung tumors and predict the response to immunotherapy.

“Instead of asking hospitals to share their data with us for developing AI, we request each hospital to contribute by developing small portions independently – creating weakly trained models. Meanwhile, we are working on technologies to aggregate models instead of data. What we are developing is a set of mathematical rules that tell us, when we have a new image, what to look at and how to abstract from this information a decision. Yes, no, there is cancer or not. This is a model, so it’s a set of parameters, and that’s what we share, not the data. The data stays in the hospital. So, if I have the parameters from hospital A and the parameters from hospital B, ideally, I can then average these parameters, and that creates the global AI.”
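The averaging step Lorenzi describes can be sketched in a few lines of Python. This is a minimal illustration, not the project's actual code: the function name `average_parameters`, the dictionary layout of the parameters and the choice to weight each hospital by its number of patients are all assumptions made for the example.

```python
from typing import Dict, List

# Hypothetical layout: each model is a dict mapping a parameter name to a list of floats.
Params = Dict[str, List[float]]


def average_parameters(hospital_params: List[Params], n_patients: List[int]) -> Params:
    """Average model parameters across hospitals, weighted by patient count.

    Only parameters are combined here; no patient data is passed in.
    """
    if not hospital_params or len(hospital_params) != len(n_patients):
        raise ValueError("need one patient count per hospital model")
    total = sum(n_patients)
    global_params: Params = {}
    for name, reference in hospital_params[0].items():
        global_params[name] = [
            sum(p[name][i] * n for p, n in zip(hospital_params, n_patients)) / total
            for i in range(len(reference))
        ]
    return global_params


# Illustrative parameters from two hospitals (made-up numbers).
hospital_a = {"weights": [0.25, 0.5], "bias": [1.0]}
hospital_b = {"weights": [0.75, 0.0], "bias": [3.0]}

global_model = average_parameters([hospital_a, hospital_b], [100, 100])
print(global_model)
```

With equal patient counts this reduces to a plain average. Weighting by patient count is one common choice when hospitals contribute different amounts of data.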

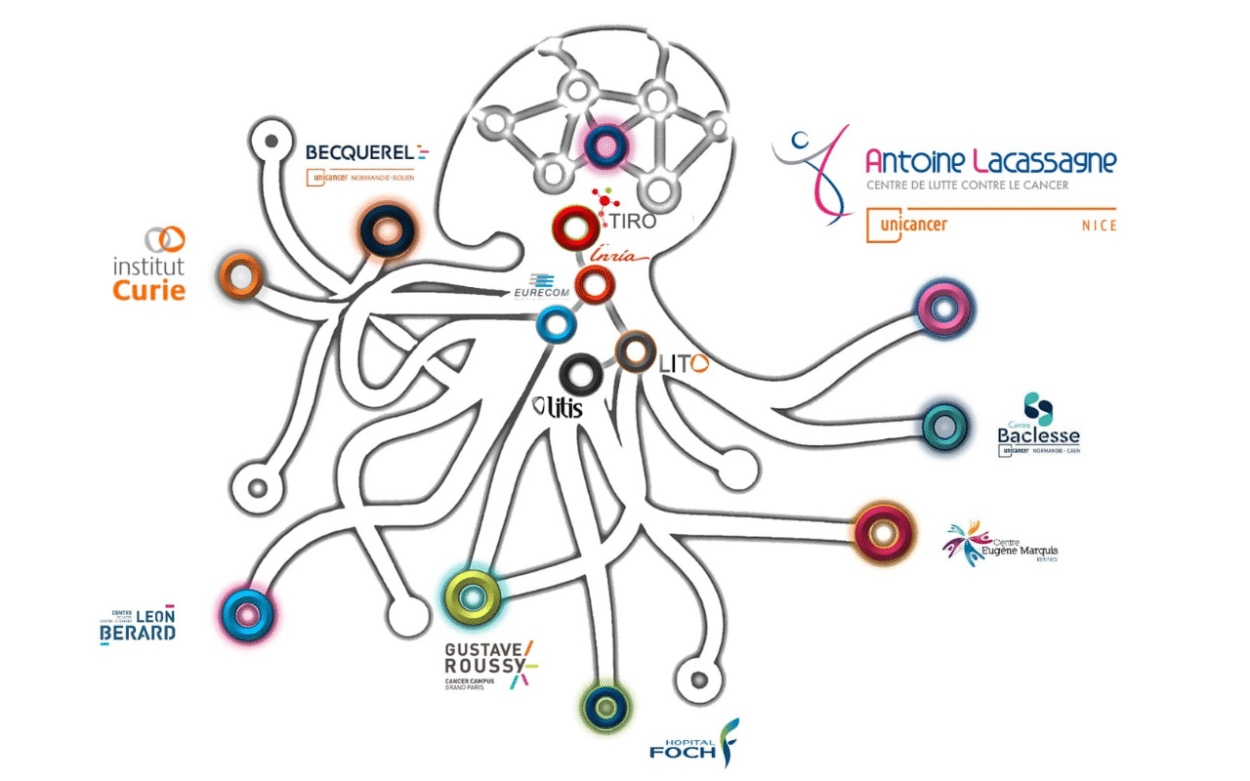

Currently, INRIA is collaborating with 10 pilot hospitals in France (including the Institut Curie in Paris and the Lacassagne Hospital in Nice). The project involves 1,300 patients (around 130 patients per hospital).

The Federated Learning Solution: Addressing Governance and Reliability Issues

How does FEDERATED-PET work concretely? Everything starts in the hospitals. After identifying eligible patients, hospitals collect clinical data and images, such as PET scans. Physicians check that the right patients have been selected and assess data quality, drawing on their familiarity with the hospital’s data. This ensures representative patient samples for effective model training, explains Professor Olivier Humbert, head of the nuclear medicine department at the Lacassagne Hospital in Nice, on the French Riviera, who coordinates the project in collaboration with INRIA. The development of AI is currently taking place in the oncology department of his hospital.

“We are working with data from patients who have already undergone treatment to determine whether the treatment was successful or not. This involves identifying the 130 patients in the hospital who are eligible for the project. They are selected based on having the specific type of cancer we are investigating, such as lung cancer, and having undergone the type of treatment we are exploring, which is immunotherapy. Visual inspections are conducted by physicians with the naked eye, to ensure a great level of quality. Then the gathered data, including clinical information and PET scan images, is organized into databases.”

Once the data is organized, it is placed on the GPU server installed in each participating hospital. This server is linked to the federated learning software, an open-source tool called FedBioMed developed by INRIA. The software plays a crucial role in the process: it receives the training instructions and makes the technology accessible for the entire operation.

Improving Security

The FEDERATED-PET infrastructure is essentially a communication system with a connected central server, operating in a star-like configuration. The central node, currently located at INRIA, receives models from each hospital via secure pathways and coordinates the training phase. The researchers send instructions to the hospitals, telling them to train the models, and the hospitals send back their results.
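As a rough illustration of this star-like exchange, the sketch below simulates a central node that sends the current model to each hospital, lets each one train on its own data and then aggregates what comes back. It does not use the FedBioMed API: the classes `HospitalNode` and `CentralNode`, the one-parameter model and the toy data are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class HospitalNode:
    """Hypothetical hospital node; its data never leaves this object."""
    name: str
    data: List[Tuple[float, float]]  # toy (x, y) pairs standing in for local records

    def train(self, weight: float, lr: float, steps: int) -> Tuple[float, int]:
        """Fit y ~ weight * x locally by gradient descent; return weight and sample count."""
        for _ in range(steps):
            grad = sum(2 * (weight * x - y) * x for x, y in self.data) / len(self.data)
            weight -= lr * grad
        return weight, len(self.data)


class CentralNode:
    """Hypothetical coordinator at the center of the star."""

    def __init__(self, hospitals: List[HospitalNode]) -> None:
        self.hospitals = hospitals
        self.weight = 0.0  # current global model (a single parameter in this toy case)

    def run_round(self, lr: float = 0.05, steps: int = 20) -> float:
        # 1. Send the instruction and current model to every hospital.
        results = [h.train(self.weight, lr, steps) for h in self.hospitals]
        # 2. Receive only the trained parameters, then average them by sample count.
        total = sum(n for _, n in results)
        self.weight = sum(w * n for w, n in results) / total
        return self.weight


hospitals = [
    HospitalNode("A", [(1.0, 2.1), (2.0, 3.9)]),
    HospitalNode("B", [(1.0, 1.9), (3.0, 6.2)]),
]
server = CentralNode(hospitals)
for round_number in range(5):
    server.run_round()
print(f"global weight after 5 rounds: {server.weight:.3f}")
```

In a real deployment the calls to `train` would be network messages over secured channels rather than direct method calls, but the flow of information is the same: instructions go out, parameters come back.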

According to Marco Lorenzi, federated AI addresses the issues of both data governance and data reliability:

“We no longer ask hospitals to open their data; they remain where they are. What we share is the AI. So, we solve a governance problem. Then, we can aggregate these different AIs coming from hospitals to develop a collective global AI. Ideally, this AI can be equivalent to the model we could have developed if we had access to all the data.”

Improving Reliability

The other advantage of federated learning is that it brings models closer to reality, thereby improving their reliability and robustness.

The challenge in healthcare is to access data that represents the full variation present in the general population. This means moving beyond the small databases of specific hospital departments to very large ones. The issue is that individual hospitals do not have such extensive data: they do not have hundreds of thousands of patients. So it is necessary to draw on data from different sources, from different hospitals.

“Today, we know that AI performs very well, especially on data that closely resembles the data on which it was originally developed. However, this also brings with it any biases present in the original data. All of these factors create significant challenges in terms of trust, reliability of AI, and its generalizability for real-world use, where it is uncertain whether the data observed accurately represents the original database.”

For example, to develop ChatGPT, OpenAI was able to access databases with billions of images and documents. It is more complex in healthcare, which is why Marco Lorenzi sees promise in the federated approach:

“With this new paradigm, each AI represents specific knowledge from a particular hospital. Ideally, when we combine them, we gain an overview of collective variability, resulting in a model that is robust to all variations.”

Adding Noise and Homomorphic Encryption for Securing Models

According to Lorenzi, INRIA has already trained a model on imaging data using the platform, and all tests have yielded positive outcomes. However, practical concerns, such as model security, still need to be addressed.

While federated learning resolves a governance issue by ensuring data doesn’t travel, it doesn’t provide a 100% guarantee of security in sharing AI models.

“We can add noise to the parameters with mathematical rules that give us some assurances. If we introduce noise, it means that whether the data was there or not, after training, we wouldn’t be able to distinguish. Hence, the model is somewhat more anonymous concerning the data.”
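The idea resembles what is known as differential privacy. A minimal sketch of the mechanics follows, assuming a hospital clips its parameter vector and adds Gaussian noise before sending it. The function name `clip_and_add_noise` and the values of `clip_norm` and `noise_std` are illustrative assumptions, and the noise level here is not calibrated to any formal privacy guarantee.

```python
import math
import random
from typing import List


def clip_and_add_noise(
    params: List[float], clip_norm: float, noise_std: float, rng: random.Random
) -> List[float]:
    """Limit the norm of a parameter vector, then add Gaussian noise to each entry.

    Clipping bounds how much any single hospital's update can weigh;
    the noise then masks small differences caused by individual records.
    """
    norm = math.sqrt(sum(p * p for p in params))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [p * scale + rng.gauss(0.0, noise_std) for p in params]


rng = random.Random(0)            # fixed seed so the example is reproducible
local_params = [3.0, 4.0]         # made-up parameters with norm 5
noisy_params = clip_and_add_noise(local_params, clip_norm=1.0, noise_std=0.1, rng=rng)
print(noisy_params)
```

More noise gives stronger protection but a less accurate model, so the noise level has to be chosen with that trade-off in mind.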

Another possibility is to encrypt the models, in particular with homomorphic encryption. The concept is that if INRIA’s researchers receive an encrypted Model A and an encrypted Model B, they won’t be able to see what’s inside either one, but will still be able to aggregate them in a mathematically correct way.

“The global model is accessible, but the model from each hospital where they were shared remains inaccessible, thereby protecting the information at its source. Therefore, we extract the correct result even if we couldn’t access the original model.”
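To make the principle concrete, here is a toy sketch of an additively homomorphic scheme (Paillier) written from scratch. The article does not say which scheme the project uses, so this choice is an assumption, as are the function names, the fixed-point `SCALE` and the idea that someone other than the aggregating server holds the private key. The primes are far too small to be secure; a real system would rely on a vetted cryptographic library.

```python
import math
import random
from typing import List, Tuple

SCALE = 1000  # fixed-point precision: parameters are rounded to 3 decimals


def generate_keys(p: int, q: int) -> Tuple[int, Tuple[int, int]]:
    """Return (public modulus n, private key) for toy Paillier with generator n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse, requires Python 3.8+
    return n, (lam, mu)


def encrypt(n: int, value: float, rng: random.Random) -> int:
    """Encrypt one parameter; negative values wrap around modulo n."""
    m = round(value * SCALE) % n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    n_sq = n * n
    return ((1 + m * n) * pow(r, n, n_sq)) % n_sq


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts."""
    return (c1 * c2) % (n * n)


def decrypt(n: int, private_key: Tuple[int, int], c: int) -> float:
    lam, mu = private_key
    x = pow(c, lam, n * n)
    m = ((x - 1) // n * mu) % n
    if m > n // 2:  # map the upper half of the range back to negative numbers
        m -= n
    return m / SCALE


rng = random.Random(42)
n, private_key = generate_keys(1009, 1013)  # toy primes, NOT secure

model_a: List[float] = [0.25, -1.5]  # made-up parameters from hospital A
model_b: List[float] = [0.75, 0.5]   # made-up parameters from hospital B

# Each hospital encrypts its parameters; the server only ever sees ciphertexts.
enc_a = [encrypt(n, v, rng) for v in model_a]
enc_b = [encrypt(n, v, rng) for v in model_b]
enc_sum = [add_encrypted(n, a, b) for a, b in zip(enc_a, enc_b)]

# The key holder decrypts only the aggregate and divides by the number of hospitals.
global_model = [decrypt(n, private_key, c) / 2 for c in enc_sum]
print(global_model)
```

The server combines the two encrypted models without ever decrypting either of them, and only the sum is revealed to whoever holds the private key.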

While technical solutions can be implemented to further protect data and models, a significant challenge remains, Lorenzi warns: hospitals must be willing to participate.

“Hospitals are the key players in AI production; they need to be capable of developing models independently. This is the price to pay for not sharing data, and achieving this is far from realistic in practice.”

Developing AI Inside Hospitals

We put the question to Professor Olivier Humbert, the head of the nuclear medicine department at the Lacassagne Hospital in Nice and the coordinator of the project. He acknowledges that training AI models is challenging for the medical community. He emphasizes that doctors need to be willing participants who find time amid their daily hospital tasks, at a moment when the French healthcare system is under considerable strain.

“Doctors have enormous workloads, so it requires their voluntary commitment and time dedication. This is a challenging aspect. Out of the ten projects, one or two hospitals have switched because it became apparent that the doctors couldn’t manage; we observed that the response time was not quick enough.”

“We Want to Be Part of It”

Despite the complexities, he believes the effort is worthwhile, and the doctors involved are currently very satisfied with the outcomes.

“What is particularly fascinating is that while there is generally a level of caution surrounding AI, especially due to potential risks in hospitals, the federated concept has generated unprecedented enthusiasm. The hospital and medical community have embraced this project wholeheartedly. In multiple presentations I have made, the response has consistently been, ‘We want to be part of it.’”

What doctors appreciate about the federated approach, he said, is that hospitals are genuinely involved and actively participate. Meetings are held every six months to provide project updates, and the hospitals verify the quality of their data. They feel truly integrated in the project: they retain control over what is being done and receive feedback. The issues the algorithm encounters are shared openly, whether it performs well or not, and it will be tested with the hospitals’ active involvement. The federated concept is designed with security in mind, avoiding the release of sensitive hospital data. But it also keeps medical teams at the core of the project, which is equally important.

“A common criticism of AI projects in healthcare is that companies often retrieve patient data, extracting it from hospitals, and then the subsequent developments of the project become unknown. There’s a lack of visibility into how the algorithm is trained, how it operates, and how it has been evaluated. The concern is that we might see a commercial solution in five years if we’re lucky, and this poses a significant problem. However, if AI training takes place within hospitals, it ensures that the AI remains under control and is utilized to improve patient care rather than optimize everything without consideration. And this aspect is widely appreciated.”

AI Training for Doctors

According to Professor Humbert, the medical community recognizes that AI is not a passing trend but a technological revolution. He believes that training will enable doctors to better understand the significance of AI and navigate its complexities. This is why he developed a comprehensive training program designed for all healthcare professionals. This university diploma consists of 120 hours of coursework to help doctors grasp AI concepts.

“Having a structured training program for doctors is crucial. The program is hosted at my university in Nice and is open to all healthcare professionals, including dentists, pharmacists, and physiotherapists. Our AI training features sessions conducted by mathematicians.”

According to him, the doctors consistently express satisfaction with the program.