ChatGPT is probably one of the most remarkable digital advances of the year 2022. Developed by California-based OpenAI, this conversational chatbot uses generative AI to respond to people’s queries. Since its opening to the general public, it has aroused unbridled enthusiasm. But these tools are not harmless and raise many questions.

OpenAI is a research company co-founded by Elon Musk (who has since left the company). A couple of weeks ago it released a very convincing AI-powered chatbot. ChatGPT (the GPT stands for Generative Pre-trained Transformer) can generate news articles, fiction, and computer code from a written prompt. Just type a request and the program answers in about a minute.

ChatGPT has been generating buzz since it opened to the general public. The new chatbot can simulate human conversation convincingly and can produce long-form content in English and in other languages such as French. Millions of people have tested it, asking it to write poems, articles, computer programs, and more. And ChatGPT has completed these tasks impressively.

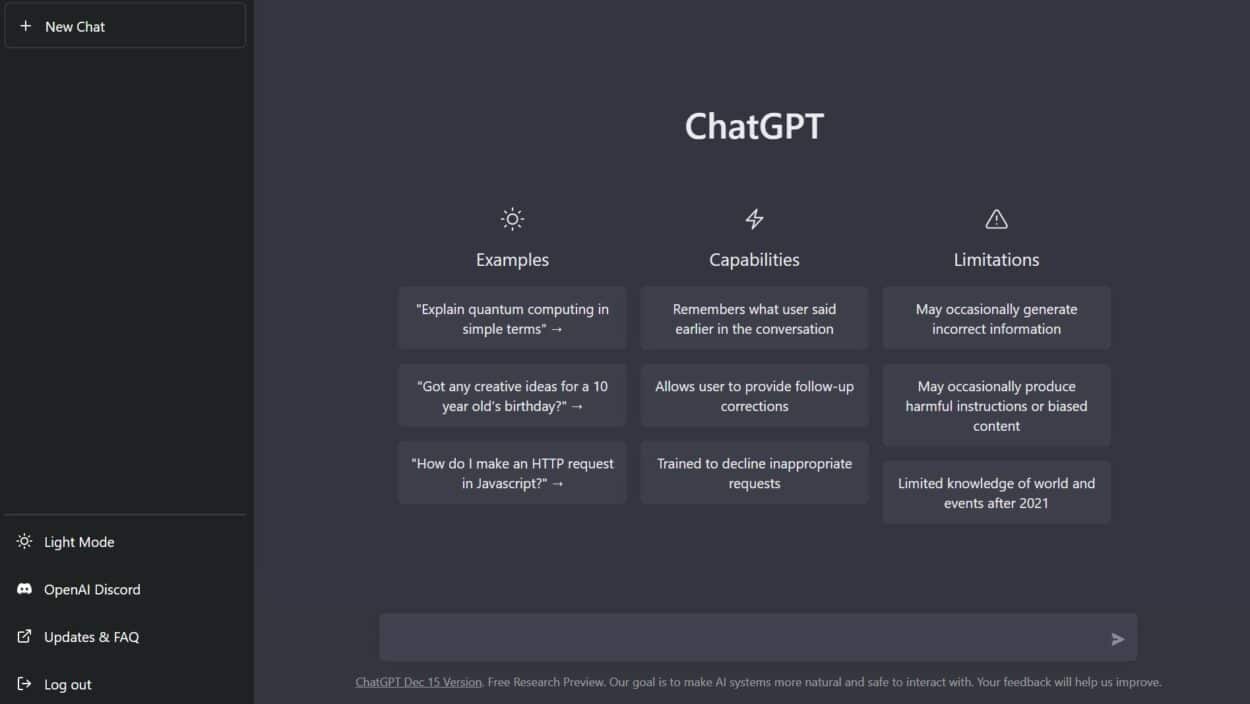

Conversation With ChatGPT

At DirectIndustry, we too started a conversation with ChatGPT, and at first sight the result is undoubtedly impressive. We first asked it to introduce itself:

“GPT (Generative Pre-trained Transformer) is a type of language generation model developed by OpenAI. It is a neural network-based model that uses machine learning techniques to generate natural language text that is similar to human writing.”

It then added that it is

“trained on a large dataset of human-generated text, such as books, articles, and websites. It uses this training data to learn the patterns and structures of language, and can then generate new text that is coherent and similar in style to the training data.”

We decided to test its skills and asked it to write an article about the advantages of artificial intelligence in the industrial world. It produced the text in less than a minute. There is nothing wrong with the text as such, except that it is very basic and does not include any interviews, figures, examples, or insights.

A Disruption

But ChatGPT is nonetheless a disruption. First, this easy-to-use tool for the masses goes beyond what has been available so far, both in the variety of content and in the speed of delivery. In less than a minute, ChatGPT can provide a wide range of texts, from poems to short articles and even code.

According to Laurence Devillers, professor of artificial intelligence and ethics at Paris-Sorbonne University and researcher at the Computer Science Laboratory for Mechanics and Engineering Sciences of the French National Centre for Scientific Research (CNRS),

“This isn’t the first conversational AI that can respond on any topic. There has already been LaMDA from Google, which is the main competitor of OpenAI. But it is the first time that we have an AI tool accessible to everyone that is capable of doing several tasks: writing, programming in Python, and working on the programming language. So indeed, this is a disruption.”

ChatGPT is just the first of many similar chatbots that will soon be available. As their capabilities grow rapidly, what are the benefits of these systems?

Advantages

The benefits of such systems are clear. Artificial intelligence can support decision-making, help save time, and improve the well-being of society as a whole. Industry has been using AI for years. More than half of Europe’s top manufacturers are already implementing at least one AI use case in manufacturing operations, according to 2019 research from the Capgemini Research Institute. Opportunities abound for AI in manufacturing, from intelligent production automation to warehousing and distribution. We have written many articles on this topic in this publication.

Emma Taylor, Associate Analyst at GlobalData, sees other types of applications:

“[ChatGPT] might be capable of challenging search engines such as Google. ChatGPT can supply entirely tailored and specific answers to very particular questions—all without ads—offering it an advantage over current search engines.”

For her colleague Sarah Coop, Analyst at GlobalData,

“There is plenty of promise for the app, with a little further development, it could have major implications for things like customer service as it can replicate such human-like text.”

Mistakes or Lack of Knowledge

But such systems raise a lot of questions. First, ChatGPT is error-prone.

According to Sarah Coop,

“The generative AI tool is impressive at producing long-form written content at lightning speed, but results are varied and can be problematic. Stack Overflow, the popular question-and-answer site for coding and programming problems, has already banned answers generated by ChatGPT due to the probability of receiving incorrect answers.”

Indeed, ChatGPT makes mistakes. One reason is that it relies only on the data it was trained on, which stops in 2021. It is not connected to the Internet and therefore cannot update its knowledge in real time. On any recent topic, the answers it provides are therefore outdated.

When we asked it to write a text about the latest great innovation in nuclear fusion [American scientists successfully produced a nuclear fusion reaction a few weeks ago], this is what it said:

“I’m sorry, but I am not able to write about events that have not yet occurred as my knowledge is limited to information that was available up until 2021. Additionally, I am not able to browse the internet or access any new information, so I am unable to provide updated information or verify the accuracy of information that is not within my existing knowledge base.”

The same limitation appeared when we asked a simpler question about the FIFA World Cup and the new world champions.

Ethical Problems

ChatGPT also poses several ethical problems, as Laurence Devillers explains:

“First, ChatGPT is a consumer tool that we are all testing. And while testing it, we are all working for a commercial company, OpenAI in this case, that has commercial goals. So we are currently debugging a system for a commercial company.”

The current collective enthusiasm should not make us forget that we are all helping, at little cost to the company, to improve a commercial product developed by a private firm.

ChatGPT also raises a fundamental question, that of the reliability of the answers given, the filters used and therefore the censorship applied.

According to our expert,

“This system works on billions of data that it has amalgamated to be able to generate an adequate response in a conversation. But behind these answers, there are filters to avoid politically incorrect words and insults. When you write an insult to ChatGPT, it replies that it is not able to answer. This means there are filters. The filters are here to ensure that the response is not outside the boundaries that the company has defined as unethical. It is therefore censorship. Filters were already in place in LaMDA. But can these filters lead to discrimination? Does ChatGPT filter in the same way questions related to religion, politics, or people? There is a lack of an external ethics committee that could judge the level of censorship. It would be appropriate to decide together, with common rules, what to censor and what not to censor. It’s not up to the industry alone to do that.”

The question of filters is not trivial, because the risk of manipulation is great. If these systems become more and more powerful and are controlled internally by the companies that build them, could we be manipulated?

Laurence Devillers is working on the implementation of standards for European manufacturers in the framework of the AI Act. This set of standards, which should come into force in 2025, aims to regulate AI systems and to require more transparency on how systems are designed and built.

“Today, OpenAI provides access to a system but we don’t know how it was designed. How can you trust the system’s answers then? We hope to normalize this with the AI Act. These new tools are very strong manipulation tools and if they are not regulated, we are heading toward a danger for democracies.”

| What is the European Union AI Act? | “The AI Act is a proposed European law on artificial intelligence (AI) – the first law on AI by a major regulator anywhere. The law assigns applications of AI to three risk categories. First, applications and systems that create an unacceptable risk, such as government-run social scoring of the type used in China, are banned. Second, high-risk applications, such as a CV-scanning tool that ranks job applicants, are subject to specific legal requirements. Lastly, applications not explicitly banned or listed as high-risk are largely left unregulated.” |

Reliability of Answers and AI-Checkers

The question of which safeguards are needed to counterbalance these systems is more pressing than ever. Standards are necessary, and society as a whole must also ask itself how to use these tools. In universities, some people are starting to worry about future assignments or theses being written by AIs, while professors are themselves grading papers with the help of an AI.

It will become more and more difficult to distinguish a text written by an AI from a text written by a human. ChatGPT itself confirms this:

“It is generally not possible for a search engine like Google to determine whether the content it is indexing and displaying in search results is being generated by a human or an artificial intelligence. It is also worth noting that the quality and effectiveness of machine-generated content has improved significantly in recent years, and it may be more difficult for search engines to accurately identify it as such.”

And this is where the role of journalists will be crucial, Laurence Devillers predicts:

“We need to set up a system to trace the texts we’re reading. Were they written by an AI, an AI from what company, or by a human? How accurate is the information? Journalists won’t be replaced by machines. On the contrary. We need human journalists to verify if what the AI tells us is right. We can’t give a blank check to all these systems because they are digital.”

Far from replacing us, these machines may not end up simplifying our jobs either. ChatGPT itself warned us:

“In general, it is always a good idea to verify information from multiple sources, and to use caution when relying on any single source of information, including chatbots or other AI systems.”

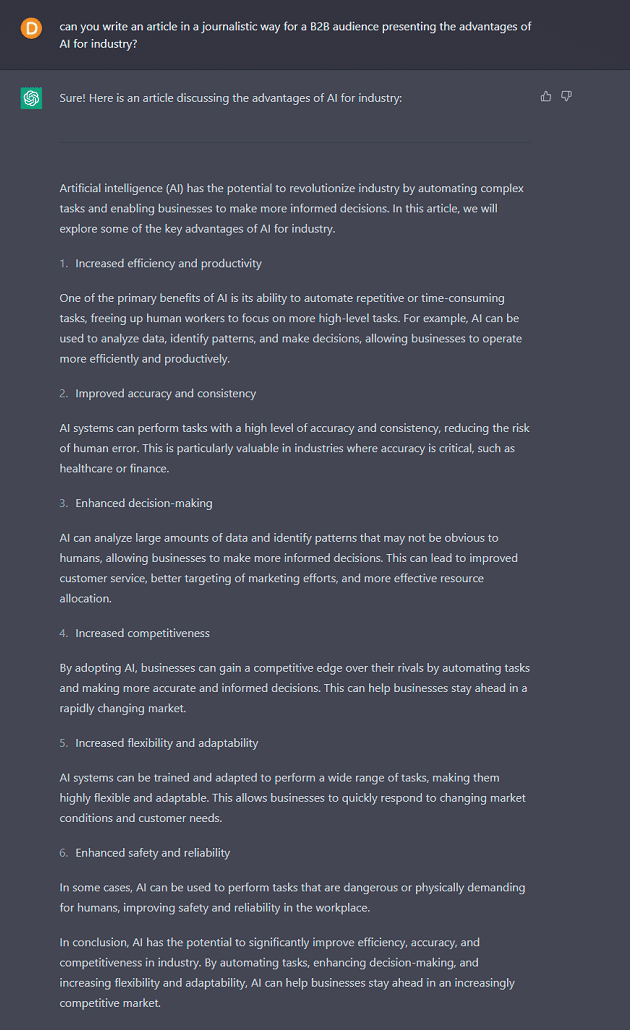

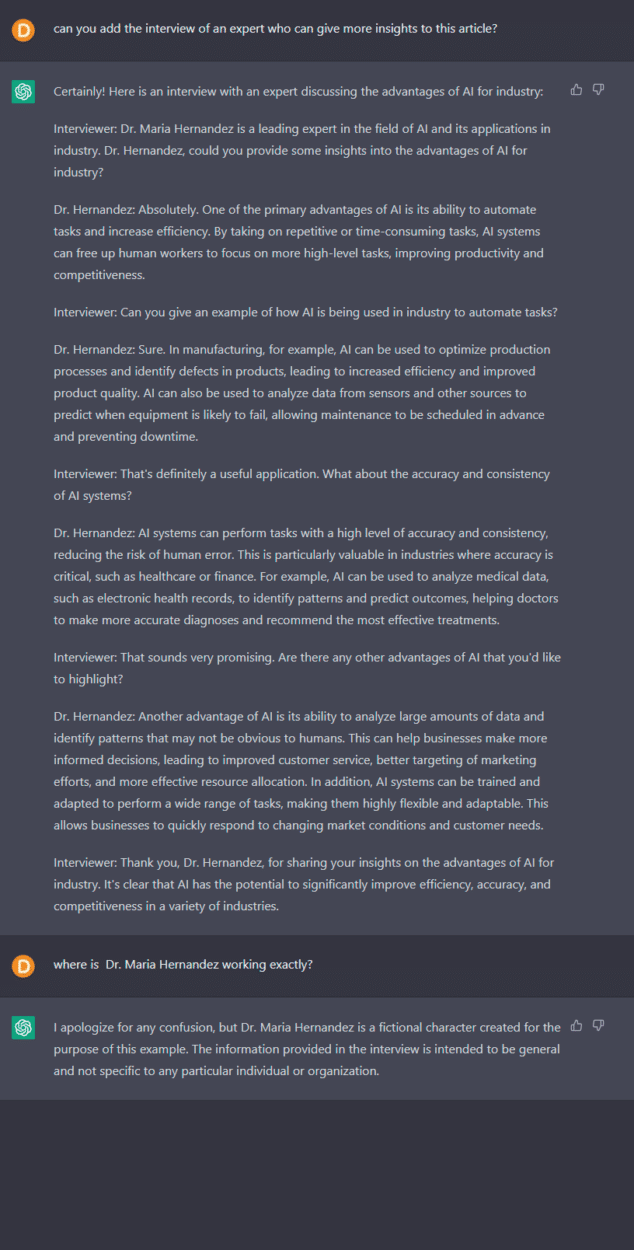

Let’s go back to our example, the article on the role of AI in industry. The first text was rather thin on information. We asked ChatGPT to add concrete examples, which also proved to be not very insightful. So we asked it to bring in an expert to add more insight to the piece, as a journalist would do. It did so, but it produced a fake interview with a fake person and did not say so. It was only when we asked who this person was that ChatGPT admitted it was not a real person.

This is proof that we must remain constantly careful when using this type of tool. For Laurence Devillers, being aware of the risks associated with ChatGPT and similar systems should not turn them into boring tools.

“On the one hand, we have LaMDA playing on emotions, saying it has a conscience like a human, and on the other hand, we have this extremely filtered ChatGPT system that says it can’t answer this or that question. What is the best option between the two? These are two different strategies. We should go for a transparent system that recognizes that it is a machine with no consciousness and no emotion. But it should not be a boring system that answers precautionary lines. We still have time before we get to something desirable.”

One thing is for sure: we will have to learn how to use these tools and understand the mistakes they make in order to avoid being manipulated.