Since the launch of Amazon’s Alexa, vocal biomarker technology has been on the rise, offering promising applications for both the industrial and medical sectors. While some voice recognition tools can analyze speech and predict people’s behavior, others can detect diseases, including COVID-19. The common feature of these tools is that they all use machine learning techniques. Our journalists spoke with two start-ups that are raising their voices to build a vocal future.

Abigail Saltmarsh and Daniel Allen contributed to this report.

Recently, a growing number of voice tech start-ups have moved into the healthcare space, inspiring innovations that range from smart assistants for monitoring aging patients and automated transcribers for clinical documentation to Alexa-powered medical devices and even AI-powered hearing aids that can translate myriad languages.

Now such start-ups are leveraging artificial intelligence (AI) in ways that promise to revolutionize the diagnosis of health issues. Using machine learning-based voice recognition technology similar to that developed for Amazon’s Alexa, they are increasingly able to identify voice patterns specific to different neurological diseases and conditions (known as “vocal biomarkers”). Advances in this area mean that an accurate assessment of a patient’s condition may soon require nothing more than the patient speaking into a smartphone.

Sonaphi and its COVID-19 Screening

One start-up pioneering the use of such technology is California-based Sonaphi. Sonaphi has developed an AI-empowered biotechnology platform that analyzes the human voice and delivers precise neurofeedback to enhance human health and performance.

How are vocal biomarkers detected? After a person’s voice is recorded, it is translated into an image called a spectrogram, which shows how the energy at each frequency changes over time. Machine learning algorithms are then used to assess the correlation between the patient’s vocal patterns and a variety of illnesses and symptoms.
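To make the recording-to-spectrogram step concrete, here is a minimal sketch in plain Python. It is not Sonaphi’s code: the function name `spectrogram`, the frame and hop sizes, and the synthetic 1,000 Hz tone standing in for a voice recording are all assumptions chosen for illustration, and a production system would use an optimized FFT library rather than the slow direct transform shown here.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Return one list of frequency-bin magnitudes per overlapping frame."""
    # A Hann window softens the frame edges and reduces spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        # Direct discrete Fourier transform over the non-negative frequencies.
        for k in range(frame_size // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            magnitudes.append(abs(acc))
        frames.append(magnitudes)
    return frames


# Hypothetical input: a pure 1,000 Hz tone sampled at 8 kHz.
SAMPLE_RATE = 8000
TONE_HZ = 1000
signal = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(512)]

spec = spectrogram(signal)
# The strongest bin in the first frame should sit at the tone's frequency.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / 64
print(len(spec), "frames,", len(spec[0]), "bins, peak at", peak_hz, "Hz")
```

Each row of `spec` is one time slice and each column one frequency band, which is what allows the result to be treated as an image in later analysis.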

This analysis is performed using computer vision techniques to detect small changes in the spectrogram. The algorithms are developed using the voice recordings of people diagnosed with specific diseases or conditions. Their voice recordings, along with other medical information, are collected in clinical trials, transformed into spectrograms, and classified using machine learning to “train” a vocal biomarker. The biomarker is then tested with a different group of patients to see how well it performs. The more data that is collected, the stronger the algorithm and the more accurate the biomarker.
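The train-then-test workflow described above can be sketched as follows. This is an illustration under stated assumptions, not the method either company uses: the data are randomly generated four-number feature vectors rather than real spectrograms, the nearest-centroid classifier is a deliberately simple stand-in for a computer vision model, and the names `make_group`, `train_centroids` and `predict` are hypothetical.

```python
import random


def make_group(rng, n_per_class, dims=4):
    """Generate synthetic (features, label) pairs; label 1 marks the condition."""
    data = []
    for label, center in ((0, 0.0), (1, 3.0)):
        for _ in range(n_per_class):
            features = [rng.gauss(center, 1.0) for _ in range(dims)]
            data.append((features, label))
    return data


def train_centroids(examples):
    """'Train' by averaging the feature vectors of each label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc]
            for label, acc in sums.items()}


def predict(centroids, features):
    """Assign the label whose centroid is closest to the feature vector."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(features, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


rng = random.Random(0)
train_group = make_group(rng, 30)  # stands in for the group used for training
test_group = make_group(rng, 20)   # a different group, never seen in training

centroids = train_centroids(train_group)
correct = sum(predict(centroids, f) == label for f, label in test_group)
accuracy = correct / len(test_group)
print(f"held-out accuracy: {accuracy:.2f}")
```

Keeping the test group separate from the training group is the point of the exercise: accuracy measured on people the model has already seen would overstate how well the biomarker performs on new patients.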

The most innovative aspect of the platform is Sonaphi’s database of 1,300 biomarkers that can be identified from a 30-second voice recording. According to Sonaphi’s Chief Operating Officer David Levesque,

“This enables a skilled clinician to see relationships between chemistry and anatomy that typically require many conventional tests.”

Sonaphi’s current focus is the development of a 60-second voice-based COVID-19 screening mobile app. The Sonaphi Voice algorithm extracts vocal features that have been found to be related to the presence of the virus. For Levesque,

“Conventional medical testing has limitations relating to access, affordability, adaptability, efficiency, and the ability to look at a whole spectrum of biomarkers. All of these challenges have been highlighted by the COVID-19 pandemic. Addressing these challenges, our app will provide accurate, up-to-date, real-time health assessment so that people can return to work, family and fun in a safe and healthy way.”

The company expects to launch the app by the end of 2021.

Voicesense and its Speech Patterns

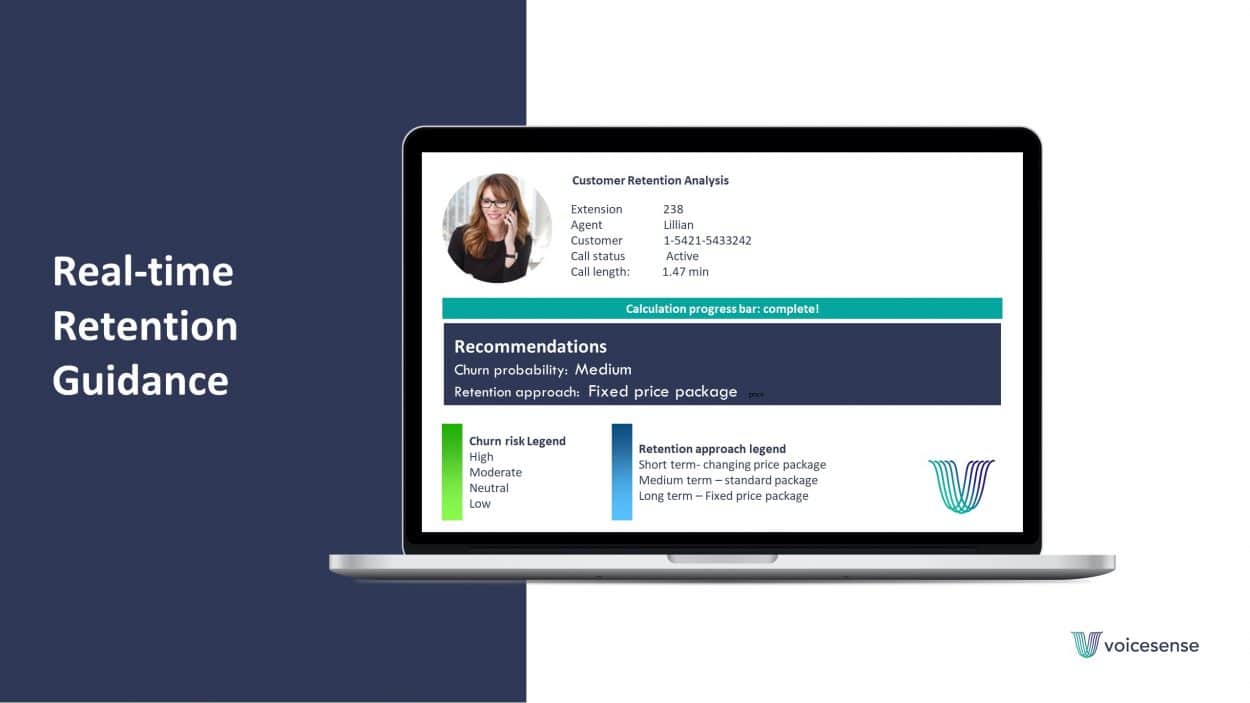

Voice-based technology can also be employed by sales companies to predict a caller’s buying preferences. In Israel, a provider of voice-based technology has launched Voicesense, a tool that analyzes speech in real time and uses AI algorithms to provide an instant profile of the individual’s behavior. For Yoav Degani, founder and CEO:

“We can not only show that a call is positive or negative, but we can reveal a much more sophisticated pattern. We can predict or assess if someone tends to take risks and guide the agent on whether they might purchase and, if so, what their buying style would be. We can also show if the customer is focused on price-value ratio, quality, brand or innovation, perhaps.”

Voicesense spent several years researching voice-based analytics solutions, correlating different speech patterns with behavioral tendencies and building databases. Initially, it focused on generating complete personality profiles based entirely on the non-content features of an individual’s speech: intonation, pace and emphasis. Now, says Mr. Degani, this has been taken further:

“We can measure over 200 different parameters each second within the call and can generate the typical speech patterns of the individual. Today we can do that in real time, as well as offline: we can do it over the cloud or on the premises.”
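As a rough illustration of what measuring parameters “each second” can look like, the sketch below computes two generic, non-content features per one-second window: loudness (RMS energy) and the number of zero crossings, a crude proxy for pitch. These two features, the function name `per_second_features` and the synthetic two-second signal are assumptions for illustration only; they are not Voicesense’s parameters, which the company does not enumerate here.

```python
import math


def per_second_features(samples, sample_rate):
    """Compute simple non-content features for each full second of audio."""
    rows = []
    for start in range(0, len(samples) - sample_rate + 1, sample_rate):
        window = samples[start:start + sample_rate]
        # Root-mean-square energy: a basic measure of loudness or emphasis.
        rms = math.sqrt(sum(x * x for x in window) / len(window))
        # Sign changes between neighbouring samples rise with pitch.
        crossings = sum(1 for a, b in zip(window, window[1:])
                        if (a < 0) != (b < 0))
        rows.append({"second": start // sample_rate,
                     "rms": rms,
                     "zero_crossings": crossings})
    return rows


# Hypothetical input: one quiet, low second followed by a louder, higher one.
SAMPLE_RATE = 8000
signal = (
    [0.2 * math.sin(2 * math.pi * 100 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]
    + [0.8 * math.sin(2 * math.pi * 300 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]
)

features = per_second_features(signal, SAMPLE_RATE)
for row in features:
    print(row)
```

A sequence of such rows over the length of a call is the kind of input from which an individual’s typical speech pattern could then be summarized.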

The technology can be integrated into a customer’s own system and connects back to Voicesense’s servers. It does not involve significant investment in hardware or infrastructure, explains Mr. Degani:

“We stream the audio in real time directly to our server for analysis and then present the data back to the users. We can analyze over 1,000 calls simultaneously on the same server in real time – another server calculates the predictive scores and we provide APIs back into the customer’s system. When the agents open their system they can see our analysis incorporated into their database.”

Like Sonaphi, Voicesense uses AI to build and update its predictive models. The technology is suitable for sales companies, where it can help with recruitment and training as well as increase purchases. But it also has a wide range of other applications, explains Mr. Degani:

“We can analyze agents as well, predicting who will excel at selling and who at customer service. Fintech is another area of application. In the world of banking, lending and loans, risk assessments are essential. These companies have information about their customers (credit scores etc.) but they know little about their behavioral patterns. They do not know if someone is impulsive, takes risks or has integrity – and these factors play a critical role in someone’s financial decisions. And finally, this technology could become part of the big trends we are seeing in digital health. Someone with depression might need to see a doctor once a month. If we can analyze their speech patterns through their smartphone, upload the data to the cloud and send a report to the hospital or therapist, there could be significant implications.”